Agentic Patterns: How We Build AI Systems at Lawpath

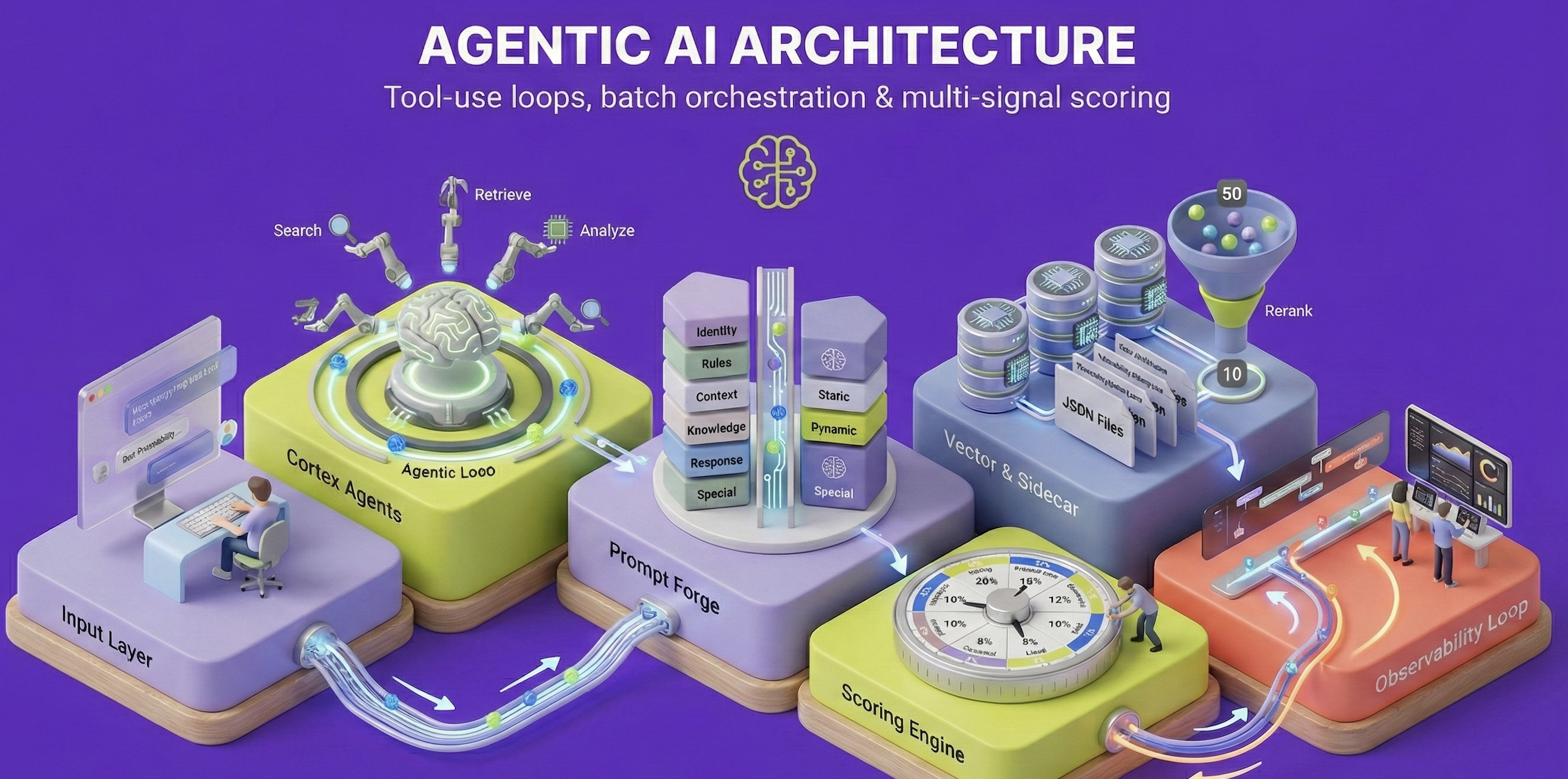

A deep dive into the architectural patterns powering Lawpath Cortex, from tool-use loops and batch orchestration to multi-signal scoring and streaming conversations.

At Lawpath, we’ve been building AI-powered systems that help hundreds of thousands of Australian businesses navigate legal and accounting complexities. Over time, we’ve developed a set of patterns that form the backbone of our AI infrastructure: Lawpath Cortex.

These patterns didn’t emerge in isolation. They’re grounded in research from teams at Google, Anthropic, and Stanford, and refined through production use at scale. This post explores how we’ve adapted established agentic design patterns to the specific challenges of legal AI, where accuracy isn’t optional and trust must be earned with every interaction.

Cortex comprises two core subsystems:

- Cortex Agents: Real-time AI agents for user-facing interactions

- Cortex Signals: Batch processing, scoring, and customer intelligence

The Research That Shaped Our Approach

Before diving into implementation, it’s worth understanding the research that informed our thinking.

Andrew Ng’s widely cited work identifies four foundational patterns for agentic systems: Reflection, Tool Use, Planning, and Multi-Agent Collaboration. His key insight is that “agentic” exists on a spectrum, from low autonomy (predetermined steps) to high autonomy (self-designed workflows). For legal applications, we deliberately operate in the middle: structured enough for reliability, flexible enough for complex queries.

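The ReAct framework (Yao et al., ICLR 2023) established the dominant paradigm for combining reasoning with action. ReAct interleaves “thought” steps with “action” steps, creating interpretable execution traces. On knowledge-intensive tasks such as HotpotQA and FEVER, grounding each reasoning step in observations from a Wikipedia API reduced the hallucination and error propagation seen in pure chain-of-thought reasoning. On interactive decision-making benchmarks (ALFWorld and WebShop), ReAct outperformed imitation and reinforcement learning baselines by 34 and 10 percentage points in absolute success rate.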

“Reasoning traces help the model induce, track, and update action plans as well as handle exceptions, while actions allow it to interface with external sources […] to gather additional information.”

— arxiv.org/abs/2210.03629

For self-correction, Madaan et al.’s Self-Refine (NeurIPS 2023) demonstrated that iterative feedback loops improve task performance by roughly 20 percentage points (absolute) on average across seven diverse tasks, without additional training or supervised data. The pattern is simple: the same model generates an output, critiques it, refines it, and repeats.
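To make the generate, critique, refine cycle concrete, here is a minimal TypeScript sketch. It is not taken from Cortex: `generate`, `critique` and `refine` are hypothetical stand-ins for model calls, and they are synchronous only to keep the example short.

```typescript
// Self-Refine-style loop: generate a draft, critique it, refine it, repeat.
// The three step functions stand in for LLM calls (hypothetical signatures).
type Critique = { done: boolean; feedback: string };

interface RefineSteps {
  generate: (task: string) => string;
  critique: (task: string, draft: string) => Critique;
  refine: (task: string, draft: string, feedback: string) => string;
}

// Stops when the critique reports nothing left to fix,
// or after `maxIterations` refinements, whichever comes first.
function selfRefine(task: string, steps: RefineSteps, maxIterations = 3): string {
  let draft = steps.generate(task);
  for (let i = 0; i < maxIterations; i++) {
    const { done, feedback } = steps.critique(task, draft);
    if (done) break; // the critic is satisfied
    draft = steps.refine(task, draft, feedback);
  }
  return draft;
}
```

Capping iterations matters in practice: a critic that never reports `done` would otherwise keep spending tokens.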

These foundations inform every pattern in Cortex.

1. Tool-Use Agentic Loop

The foundation of Cortex Agents is the classic agentic loop: the LLM iteratively calls tools until it has enough information to respond. This directly implements the ReAct pattern, interleaving reasoning with action.

```text
User Query → LLM → Tool Call → Execute → Observe → LLM → ... → Final Response
```

Our Legal Research agent demonstrates this pattern well. It uses specialised tools like search_case_law, find_precedents, and analyze_treatment to research Australian case law before providing advice. Extended thinking is enabled for complex legal reasoning, allowing the model to “think aloud” before committing to actions.

```typescript
// CortexTool: our internal tool-definition type (definition not shown)
const legalTools: CortexTool[] = [
  {
    name: 'search_case_law',
    description: 'Enhanced search with hybrid retrieval, query expansion, and citation tracking.',
    input_schema: {
      type: 'object',
      properties: {
        query: { type: 'string' },
        jurisdiction: { type: 'string', enum: ['commonwealth', 'new_south_wales' /* , ... */] },
        document_type: { type: 'string', enum: ['decision', 'primary_legislation' /* , ... */] },
      },
      required: ['query'],
    },
  },
  // ... additional tools
];
```

The agent processes responses recursively, handling tool calls until it receives a final text response:

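```typescript
private async processResponse(message /* , ... */): Promise<{ advice: string }> {
  const toolResults = [];

  for (const content of message.content) {
    if (content.type === 'tool_use') {
      const result = await this.handleToolUse(content.name, content.input);
      // Continue conversation with tool results: collect each one as an observation
      toolResults.push({ type: 'tool_result', tool_use_id: content.id, content: result });
    }
  }

  // No tool calls means this is the final text response (the base case)
  if (toolResults.length === 0) {
    const advice = message.content.filter((c) => c.type === 'text').map((c) => c.text).join('');
    return { advice };
  }

  // sendToolResults is an illustrative name for the follow-up request (details elided)
  const followUp = await this.sendToolResults(toolResults /* , ... */);
  // Recurse until no more tool calls
  return await this.processResponse(followUp /* , ... */);
}
```

This recursive structure mirrors what the ReAct paper describes as “dynamic reasoning to create, maintain, and adjust high-level plans for acting.” Each tool result becomes an observation that informs the next reasoning step.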
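The recursive version has no explicit depth limit. A minimal iterative sketch of the same reason, act, observe cycle with a step cap might look like this; `callModel`, `ModelTurn` and the tool map are hypothetical simplifications, not a real SDK API, and the calls are synchronous for brevity.

```typescript
// Iterative tool-use loop with a step cap (hypothetical types, not a real SDK).
type ToolCall = { name: string; input: Record<string, unknown> };
type ModelTurn = { text: string; toolCalls: ToolCall[] };
type LoopMessage = { role: 'user' | 'assistant' | 'tool'; content: string };

function runToolLoop(
  query: string,
  callModel: (history: LoopMessage[]) => ModelTurn,
  tools: Record<string, (input: Record<string, unknown>) => string>,
  maxSteps = 8,
): string {
  const history: LoopMessage[] = [{ role: 'user', content: query }];
  for (let step = 0; step < maxSteps; step++) {
    const turn = callModel(history); // reason
    history.push({ role: 'assistant', content: turn.text });
    if (turn.toolCalls.length === 0) return turn.text; // final response
    for (const call of turn.toolCalls) {
      const tool = tools[call.name];
      // act: unknown tools produce an error observation instead of throwing
      const observation = tool ? tool(call.input) : `Unknown tool: ${call.name}`;
      history.push({ role: 'tool', content: observation }); // observe
    }
  }
  throw new Error(`No final response after ${maxSteps} steps`);
}
```

The cap turns a runaway loop into an explicit error that can be logged and traced, rather than a request that never finishes.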

Web Search with Quality Gates

One of our most critical tools is web search. Following the Self-Refine principle of iterative improvement, we use a three-phase pattern with quality gates:

- Query Analysis: A lightweight model classifies intent and selects relevant domains

- Domain-Filtered Search: Search API with Australian legal and government domains prioritised

- Quality Gate + Fallback: If results don’t meet thresholds, retry without domain filters

```typescript
private assessQuality(result: InternalSearchResult): QualityAssessment {
  // citationCount, contentLength and hasAuthoritativeSources are derived from `result` (elided)
  const passed =
    citationCount >= 2 &&
    contentLength >= 300 &&
    (hasAuthoritativeSources || citationCount >= 3);

  return { passed, citationCount, hasAuthoritativeSources };
}
```

The domain registry maintains tiered authority: .gov.au sources rank highest, followed by legal publishers, then general sources. This ensures our agents cite authoritative Australian legal sources rather than generic web content.

Why this matters: Andrew Ng notes that reflection works better when it draws on external feedback (for example, running generated code against unit tests) than when the model critiques itself unaided. Our quality gates provide that external signal. If search results don’t meet objective thresholds, the system retries with different parameters rather than proceeding with poor data.
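The search phases can be sketched as follows, assuming a hypothetical `search(query, domains?)` function and an illustrative two-entry publisher list. The query-analysis phase that chooses `domains` is left out, and the gate thresholds mirror `assessQuality`.

```typescript
// Domain-filtered search with a quality gate and an unfiltered fallback.
// SearchResult, the injected search function and LEGAL_PUBLISHERS are hypothetical.
type SearchResult = { content: string; citations: string[] };

const LEGAL_PUBLISHERS = ['austlii.edu.au', 'jade.io']; // example entries only

function hostOf(url: string): string {
  return (url.replace(/^https?:\/\//, '').split('/')[0] ?? '').toLowerCase();
}

// Lower tier = more authoritative: .gov.au, then legal publishers, then the rest
function authorityTier(url: string): number {
  const host = hostOf(url);
  if (host.endsWith('.gov.au')) return 0;
  if (LEGAL_PUBLISHERS.some((d) => host === d || host.endsWith(`.${d}`))) return 1;
  return 2;
}

function passesGate(r: SearchResult): boolean {
  const citationCount = r.citations.length;
  // Assumption: government and legal-publisher domains count as authoritative
  const hasAuthoritativeSources = r.citations.some((u) => authorityTier(u) <= 1);
  return citationCount >= 2 && r.content.length >= 300 &&
    (hasAuthoritativeSources || citationCount >= 3);
}

function searchWithFallback(
  query: string,
  domains: string[],
  search: (query: string, domains?: string[]) => SearchResult,
): { result: SearchResult; usedFallback: boolean } {
  const filtered = search(query, domains);
  if (passesGate(filtered)) return { result: filtered, usedFallback: false };
  // Gate failed: retry once without domain filters
  return { result: search(query), usedFallback: true };
}
```

Returning `usedFallback` lets the caller log how often the domain filter is too narrow, which is a useful signal for tuning the domain registry.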

2. Domain-Specific Retrieval: Beyond Generic Embeddings

A critical component of our agentic infrastructure is semantic retrieval from our proprietary legal knowledge corpus. This is where generic approaches fall over.

Standard embedding models struggle with legal text because, to a general-purpose model, most legal passages look alike: they share the same jargon and boilerplate. That makes it hard to separate relevant content from irrelevant material. A contract termination clause and a contract renewal clause might have high cosine similarity despite having opposite effects.

Rather than general-purpose models, we use domain-specific legal embeddings for our Australian legal and accounting content: Voyage AI’s legal-optimised voyage-law-2 model, which significantly outperforms standard models at disambiguating relevant legal text:

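```typescript
const VOYAGE_API_URL = 'https://api.voyageai.com/v1/embeddings';
const API_KEY = process.env.VOYAGE_API_KEY;
const VOYAGE_MODEL = 'voyage-law-2';
const EMBEDDING_DIMENSION = 1024; // voyage-law-2 output size; must match the vector index

export async function generateEmbedding(
  text: string,
  inputType: 'document' | 'query' = 'document'
): Promise<number[]> {
  const response = await fetch(VOYAGE_API_URL, {
    method: 'POST',
    headers: {
      'Authorization': `Bearer ${API_KEY}`,
      'Content-Type': 'application/json',
    },
    body: JSON.stringify({
      input: text,
      model: VOYAGE_MODEL,
      input_type: inputType, // 'query' for search, 'document' for indexing
      truncation: true,
    }),
  });

  if (!response.ok) {
    throw new Error(`Voyage embeddings request failed: ${response.status}`);
  }

  return (await response.json()).data[0].embedding;
}
```

The input_type parameter is crucial: we use 'document' when indexing and 'query' when searching. This asymmetric embedding approach, documented in Voyage’s API reference, improves retrieval quality by optimising each vector for its role: Voyage prepends a different retrieval instruction to queries and to documents before embedding them.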

Two-Stage Retrieval with Reranking

Cosine similarity provides a good first-pass ranking, but for legal content we add a reranking stage that provides more accurate relevance scoring:

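```text
Query → Embedding → Vector Search (top 50) → Rerank (top 10) → Final Results
```

```typescript
const RERANK_API_URL = 'https://api.voyageai.com/v1/rerank';
const API_KEY = process.env.VOYAGE_API_KEY;

export interface RerankResult {
  index: number;           // position of the document in the input array
  relevance_score: number; // higher is more relevant
}

export async function rerankDocuments(
  query: string,
  documents: string[],
  topK: number = 10
): Promise<RerankResult[]> {
  const response = await fetch(RERANK_API_URL, {
    method: 'POST',
    headers: {
      'Authorization': `Bearer ${API_KEY}`,
      'Content-Type': 'application/json',
    },
    body: JSON.stringify({
      query,
      documents,
      model: 'rerank-2',
      top_k: topK,
    }),
  });

  if (!response.ok) {
    throw new Error(`Voyage rerank request failed: ${response.status}`);
  }

  return (await response.json()).data;
}
```

This two-stage approach reduces irrelevant material in our top results by approximately 25% compared with embedding similarity alone. Storage stays lean as well: voyage-law-2’s 1,024-dimension vectors are one-third the size of the 3,072-dimension vectors produced by larger general-purpose embedding models.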
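Putting the two stages together, here is a minimal in-memory sketch. Cosine similarity over a toy corpus stands in for the vector database, and the reranker is injected so the example runs offline (in production it would wrap `rerankDocuments`). `Doc` and `twoStageRetrieve` are illustrative names.

```typescript
// Two-stage retrieval: cheap cosine ranking, then a reranker on the shortlist.
type Doc = { id: string; text: string; embedding: number[] };

function cosine(a: number[], b: number[]): number {
  let dot = 0, na = 0, nb = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    na += a[i] * a[i];
    nb += b[i] * b[i];
  }
  if (na === 0 || nb === 0) return 0; // avoid dividing by zero for empty vectors
  return dot / (Math.sqrt(na) * Math.sqrt(nb));
}

function twoStageRetrieve(
  queryEmbedding: number[],
  corpus: Doc[],
  rerank: (candidates: Doc[]) => { index: number; relevance_score: number }[],
  firstPassK = 50,
  finalK = 10,
): Doc[] {
  // Stage 1: vector similarity over the whole corpus, keep the top firstPassK
  const candidates = corpus
    .map((doc) => ({ doc, score: cosine(queryEmbedding, doc.embedding) }))
    .sort((x, y) => y.score - x.score)
    .slice(0, firstPassK)
    .map((c) => c.doc);
  // Stage 2: rerank only the shortlist; indices refer to `candidates`
  return rerank(candidates)
    .sort((x, y) => y.relevance_score - x.relevance_score)
    .slice(0, finalK)
    .map((r) => candidates[r.index]);
}
```

The split is a cost trade-off: a reranker scores each query and document together, which is more accurate but too expensive to run over the whole corpus, so it only sees the first-pass shortlist.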

Faceted Customer Narratives

Beyond case law, we store faceted narratives about each customer in the vector database. These narratives capture different aspects of a customer’s relationship with Lawpath:

| Facet | Content |
|---|---|
| Plans | Subscription history and current plan details |
| Payments | Billing patterns and payment health |
| Documents | Document usage and contract types |
| Activity | Engagement patterns and recent actions |
| Calls | Advisory consultation history |
| Compliance | ASIC filings and compliance status |
| Formations | Company formation details |

Each facet is embedded separately, enabling semantic search across customer context:

```typescript
export async function fetchFacetNarratives(
  customerId: string
): Promise<Map<FacetType, string>> {
  const facetTypes = ['plans', 'payments', 'documents', 'activity',
    'calls', 'compliance', 'formations'];

  // Facet records use deterministic IDs, so all of a customer's facets can be fetched directly
  const facetIds = facetTypes.map(type => `facet_${customerId}_${type}`);
  const response = await vectorIndex.namespace('facet').fetch(facetIds);

  const narratives = new Map<FacetType, string>();
  Object.values(response.records).forEach((record: any) => {
    narratives.set(record.metadata.facet_type, record.metadata.narrative);
  });

  return narratives;
}
```

Because each facet is embedded separately, our agents can also search customer context semantically rather than relying on exact field matching. When an agent needs to understand a customer’s compliance history, it retrieves the compliance narrative, not a raw database dump.

3. Prompt Forge: Modular System Prompts

As our agents grew, so did our system prompts. A single Legal Research agent prompt exceeded 8,000 tokens. Managing this complexity required structure.

We built Prompt Forge to construct prompts from six standardised sections:

| Section | Purpose |
|---|---|
| Identity & Role | Who the agent is and how it should behave |
| Critical Rules | Non-negotiable constraints |
| User Context | Dynamic per-user information |
| Knowledge Base | Platform documentation and pricing |
| Response Framework | Output formatting guidelines |
| Special Handling | Edge cases and exceptions |

We use markers to separate static (cacheable) from dynamic content:

```text
[[LP:STATIC_START]]
... cacheable system prompt ...
[[LP:STATIC_END]]
[[LP:DYNAMIC_START]]
<user_context>{{user_context}}</user_context>
[[LP:DYNAMIC_END]]
```

Why the separation matters: Anthropic’s prompt caching bills cache reads at 10% of the base input-token price, a significant cost reduction for static content. By explicitly marking boundaries, we ensure cache hits on the expensive system prompt while injecting fresh user context per request.
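Here is a sketch of how a compiled template could be split at these markers into Anthropic Messages API `system` blocks. The `cache_control: { type: 'ephemeral' }` field on the static block marks a cache breakpoint, so the prefix up to and including that block can be served from cache while the dynamic block after it is billed normally. The helper names are hypothetical, and the template is assumed to contain each marker exactly once.

```typescript
// Split a Prompt Forge-style template into cacheable and per-request system blocks.
// Helper names are hypothetical; the block shape follows Anthropic's Messages API.
type SystemBlock = {
  type: 'text';
  text: string;
  cache_control?: { type: 'ephemeral' };
};

// Text between two markers, trimmed; throws if either marker is missing
function between(template: string, start: string, end: string): string {
  const i = template.indexOf(start);
  const j = template.indexOf(end);
  if (i === -1 || j === -1 || j < i) throw new Error(`Missing ${start} or ${end}`);
  return template.slice(i + start.length, j).trim();
}

function toSystemBlocks(template: string, userContext: string): SystemBlock[] {
  const staticText = between(template, '[[LP:STATIC_START]]', '[[LP:STATIC_END]]');
  // A replacer function avoids special handling of `$` sequences in userContext
  const dynamicText = between(template, '[[LP:DYNAMIC_START]]', '[[LP:DYNAMIC_END]]')
    .replace('{{user_context}}', () => userContext);
  return [
    // Cache breakpoint: everything up to and including this block is cacheable
    { type: 'text', text: staticText, cache_control: { type: 'ephemeral' } },
    // Fresh per request, after the breakpoint
    { type: 'text', text: dynamicText },
  ];
}
```

One caveat: prompts shorter than the model’s minimum cacheable length are not cached at all, so long, stable system prompts benefit most.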

The builder publishes compiled prompts to object storage and our observability platform for version control. Slowly changing data such as document links and pricing is injected at build time, while per-user context is injected per request. This gives us Git-like history for prompts, which is critical when debugging why an agent’s behaviour changed.

4. Real-Time Customer Intelligence Engine

Cortex Signals is our real-time customer intelligence engine. It implements what Microsoft’s Agent Factory research calls “orchestrated multi-signal processing”, combining multiple data streams into actionable intelligence.

Signal Categories

The engine computes multiple signal categories in real time:

| Signal Category | Purpose |
|---|---|
| PQL (Product Qualified Lead) | Conversion likelihood for free users based on behaviour patterns |
| Churn Probability Index | Risk scoring for paying customers with recommended interventions |
| Next Best Action | AI-generated recommendations (consultation, document, quote) |
| Service Propensity | Which services (legal, accounting, compliance) the customer likely needs |
| Conversion Signals | High-intent events accumulated over time |

Multi-Signal Scoring Architecture

Each score combines weighted signals from multiple data sources. Our Churn Probability Index uses a 9-component ensemble, a design informed by research showing that ensemble approaches consistently outperform single-signal models:

| Component | Weight | What It Measures |
|---|---|---|
| Billing | 28% | Payment health, failed charges, arrears |
| Engagement | 18% | Login frequency and recency |
| Behavioural | 12% | Product usage and value realisation |
| RFM | 10% | Recency-Frequency-Monetary segmentation |
| Trend | 8% | Engagement direction over time |
| Seasonal | 8% | Expected activity for Australian calendar |
| Lifecycle | 6% | Customer age and maturity |
| Intent | 6% | Cancellation signals |
| Health | 4% | Positive indicators (inverted) |

The weighting is empirically derived: billing signals are roughly 3x more predictive than engagement alone. This aligns with industry research showing that payment behaviour is the strongest leading indicator of churn.
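As an illustration, the weighted sum behind the index fits in a short function. This is a sketch under stated assumptions: every component is taken to be pre-normalised to a 0 to 1 risk score, and the health component, being a positive signal, is inverted before weighting.

```typescript
// Weighted Churn Probability Index using the weights from the table.
// Assumes each input is already normalised to [0, 1]; health is a positive score.
const CPI_WEIGHTS = {
  billing: 0.28, engagement: 0.18, behavioural: 0.12, rfm: 0.10, trend: 0.08,
  seasonal: 0.08, lifecycle: 0.06, intent: 0.06, health: 0.04,
} as const;

type CpiComponent = keyof typeof CPI_WEIGHTS;

function churnProbabilityIndex(scores: Record<CpiComponent, number>): number {
  let total = 0;
  for (const key of Object.keys(CPI_WEIGHTS) as CpiComponent[]) {
    const s = Math.min(1, Math.max(0, scores[key])); // clamp to [0, 1]
    const risk = key === 'health' ? 1 - s : s;       // invert the positive signal
    total += CPI_WEIGHTS[key] * risk;
  }
  return total; // stays in [0, 1] because the weights sum to 1
}
```

Because the weights sum to 1, the raw index is already bounded; turning it into a true probability is the job of the calibration step described next.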

Real-Time Enrichment Pipeline

Signals flow through a real-time enrichment pipeline that updates customer context as events occur:

```text
Event (login, document, payment) → Signal Engine → Score Update → Sidecar Write → Agent Context
```

When an agent interacts with a customer, it receives enriched context including current risk tier, service propensity scores, accumulated conversion signals, and recent activity patterns. The agent doesn’t query raw databases. It receives pre-computed intelligence.

Adaptive Scoring

The engine adapts to context:

- Seasonal adjustment: Lower engagement expectations during the Australian quiet period (Dec 15 to Jan 15)

- Service-type awareness: Virtual office customers have different engagement expectations than legal advice subscribers

- Sigmoid calibration: Raw scores are mapped to calibrated probabilities with a fitted sigmoid (Platt-style scaling)

- Value at Risk: High-value customers get priority intervention recommendations

- Decay functions: Older signals contribute less than recent ones

This seasonal awareness is critical. Without it, we’d flag every customer as high churn risk during the Christmas period, generating false positives that erode trust in the system.
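Several of these adjustments are small formulas. The sketch below shows a quiet-period check, exponential decay and sigmoid calibration. The 15 December to 15 January window comes from the list above; the 14-day half-life, the 0.5 quiet-period discount and the calibration coefficients `a` and `b` are placeholder values, not our production parameters.

```typescript
// Adaptive-scoring helpers; all numeric defaults are placeholders.

// True from 15 December to 15 January inclusive (UTC dates, for simplicity)
function isQuietPeriod(date: Date): boolean {
  const month = date.getUTCMonth(); // 0 = January, 11 = December
  const day = date.getUTCDate();
  return (month === 11 && day >= 15) || (month === 0 && day <= 15);
}

// Exponential decay: a signal loses half its weight every halfLifeDays
function decayWeight(ageDays: number, halfLifeDays = 14): number {
  return Math.pow(0.5, ageDays / halfLifeDays);
}

// Sigmoid calibration of a raw score; a and b would be fitted on historical outcomes
function calibrate(raw: number, a = 1, b = 0): number {
  return 1 / (1 + Math.exp(-(a * raw + b)));
}

// Discount the engagement risk component during the quiet period
function engagementRisk(rawRisk: number, date: Date, quietFactor = 0.5): number {
  return isQuietPeriod(date) ? rawRisk * quietFactor : rawRisk;
}
```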

5. Cortex Orchestrator: Unified Batch Processing

For non-real-time workloads, we use a batch orchestration pattern that generates multiple outputs in a single LLM call per user.

```text
Queues (PQL, Churn, Vector) → Merge by userId → Build Context → Batch API → Fan Out
```

This architecture emerged from a practical problem: we were making separate AI calls for sales briefs, churn summaries, and vector narratives. That meant three calls per user, each loading the same context. The orchestrator merges these into one:

```typescript
const BATCH_CONFIG: BatchConfig = {
  temperature: 0.3,
  maxTokens: 2000,
  responseFormat: 'json_object',
  promptCacheKey: 'orchestrator-v1',
};
```

After batch completion, results fan out to notification queues and vector storage. The consistent promptCacheKey keeps cache hit rates high across batch items, so each user’s request benefits from the cached system prompt.

Cost impact: Batch processing with prompt caching reduced our per-user enrichment cost by approximately 60% compared to individual real-time calls.
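The merge-by-userId step is what removes the duplicated context loading. A minimal sketch, assuming a hypothetical message shape with `queue`, `userId` and `payload` fields:

```typescript
// Group queue messages so each user becomes a single batch job.
// The message and job shapes are hypothetical.
type QueueName = 'pql' | 'churn' | 'vector';
type QueueMessage = { queue: QueueName; userId: string; payload: unknown };
type UserJob = { userId: string; tasks: QueueName[]; payloads: Partial<Record<QueueName, unknown>> };

function mergeByUser(messages: QueueMessage[]): UserJob[] {
  const jobs = new Map<string, UserJob>(); // insertion order = first-seen order
  for (const msg of messages) {
    let job = jobs.get(msg.userId);
    if (!job) {
      job = { userId: msg.userId, tasks: [], payloads: {} };
      jobs.set(msg.userId, job);
    }
    // A user queued twice on the same queue keeps one task with the latest payload
    if (!job.tasks.includes(msg.queue)) job.tasks.push(msg.queue);
    job.payloads[msg.queue] = msg.payload;
  }
  return Array.from(jobs.values());
}
```

Each resulting job becomes one batch request whose JSON response contains an output per task, which is then fanned back out to the relevant queue or store.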

6. Journey Tracking and ML Training Pipeline

Beyond real-time scoring, we capture complete customer journeys for training predictive models. The idea is loosely analogous to experience replay in reinforcement learning: historical trajectories are reused to improve future predictions.

```typescript
export type JourneyMilestone =
  | 'lead_created'
  | 'onboarding_completed'
  | 'first_document_completed'
  | 'first_call_completed'
  | 'first_plan_activated'
  | 'first_brief_paid'
  | 'high_pql_achieved'
  | 'became_vip';

export interface CustomerJourney {
  userId: string;
  // Milestone timestamps; Partial because not every customer reaches every milestone
  milestones: Partial<Record<JourneyMilestone, string>>;
  touchpoints: Touchpoint[];
  signalsAtMilestones: Record<string, MilestoneSignals>;
  conversions?: ConversionMetrics;
}
```

Each journey captures milestone timestamps, chronological touchpoints, score snapshots at each milestone for feature engineering, and conversion metrics (days to convert, touches before conversion).

Journeys are stored in S3 with a date-partitioned structure:

```text
cortex/training/journeys/2026/01/21/{journeyId}.json
cortex/training/outcomes/2026/01/{userId}_30d.json
```

Outcome labels are captured 30, 60, and 90 days after journey snapshots, recording whether the customer churned, grew MRR, or added new plans. This creates a supervised learning dataset where features (signals at milestones) are paired with ground-truth outcomes.
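Two small helpers illustrate the layout: one builds the date-partitioned journey key in the format shown above, and one computes when the 30, 60 and 90 day outcome labels fall due. Function names are hypothetical, and dates are handled in UTC.

```typescript
// Journey key builder and label due dates (hypothetical helpers, UTC throughout).
const DAY_MS = 24 * 60 * 60 * 1000;

function pad(n: number): string {
  return n < 10 ? `0${n}` : String(n);
}

// e.g. cortex/training/journeys/2026/01/21/{journeyId}.json
function journeyKey(snapshot: Date, journeyId: string): string {
  const y = snapshot.getUTCFullYear();
  const m = pad(snapshot.getUTCMonth() + 1); // getUTCMonth is 0-based
  const d = pad(snapshot.getUTCDate());
  return `cortex/training/journeys/${y}/${m}/${d}/${journeyId}.json`;
}

// When each outcome label becomes observable after the snapshot
function labelDueDates(snapshot: Date, horizons = [30, 60, 90]): { horizon: number; due: Date }[] {
  return horizons.map((h) => ({ horizon: h, due: new Date(snapshot.getTime() + h * DAY_MS) }));
}
```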

This data feeds XGBoost-based prediction models on SageMaker:

| Model | Purpose |
|---|---|
| Churn classifier | Predicts 30-day churn probability using journey features |
| Conversion propensity | Scores free users’ likelihood to convert |
| Expansion predictor | Identifies customers likely to add services |

Model explainability is critical for customer-facing decisions. We integrate SageMaker Clarify to generate SHAP values:

```text
Churn prediction: 0.73 (High Risk)
├── billing_health: -0.28 (2 failed payments)
├── engagement_trend: -0.19 (declining logins)
├── days_since_last_call: -0.12 (no contact in 45 days)
└── document_completion_rate: +0.08 (positive signal)
```

This transparency allows customer success teams to understand why a customer is flagged, enabling targeted interventions rather than generic outreach.

7. Two-Phase Structured Output

When we need both flexible tool use and guaranteed JSON output, we use a two-phase approach. This addresses a practical limitation: some LLM providers don’t support combining tool use with schema-constrained output in a single request, or don’t support it reliably.

Phase 1: Tool Use Loop (no structured output)

```typescript
const response = await cortex.complete({
  tools,
  toolChoice: 'auto',
  messages,
});
```

Phase 2: Structured Output

```typescript
// contextWithToolResults: the information gathered in phase 1, passed back as context
const structuredResponse = await cortex.complete({
  outputFormat: {
    type: 'json_schema',
    schema: RETENTION_CONTENT_SCHEMA,
  },
  messages: [{ role: 'user', content: contextWithToolResults }],
});
```

The first phase gathers information through tool use; the second phase formats it. This separation ensures we get both flexible information gathering and reliable output structure.
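Even with schema-constrained output, it is worth validating phase 2 before using it. A minimal sketch of a bounded retry: `format` is a hypothetical stand-in for the phase 2 call, and a list of required keys stands in for `RETENTION_CONTENT_SCHEMA` (a real implementation would validate against the full JSON Schema).

```typescript
// Parse and check phase 2 output, retrying a bounded number of times.
// `format` and `requiredKeys` are hypothetical stand-ins.
function formatWithRetry(
  format: (attempt: number) => string,
  requiredKeys: string[],
  maxAttempts = 2,
): Record<string, unknown> {
  let lastError = 'no attempts made';
  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    try {
      const parsed: unknown = JSON.parse(format(attempt));
      if (typeof parsed !== 'object' || parsed === null || Array.isArray(parsed)) {
        lastError = 'not a JSON object';
        continue;
      }
      const obj = parsed as Record<string, unknown>;
      const missing = requiredKeys.filter((k) => !(k in obj));
      if (missing.length === 0) return obj; // valid: stop retrying
      lastError = `missing fields: ${missing.join(', ')}`;
    } catch (e) {
      lastError = `invalid JSON: ${(e as Error).message}`;
    }
  }
  throw new Error(`Phase 2 failed after ${maxAttempts} attempts (${lastError})`);
}
```

Failing loudly after the last attempt fits the confidence-gate philosophy: a worker that cannot produce valid output skips the action rather than sending something malformed.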

8. Streaming Agents and Event-Driven Orchestration

For real-time conversations, we stream responses with Server-Sent Events:

```typescript
responseStream.write(`data: ${JSON.stringify({ type: 'start' })}\n\n`);

for await (const event of cortexStream) {
  if (event.type === 'content_delta') {
    responseStream.write(`data: ${JSON.stringify({
      type: 'content',
      content: event.delta.text,
    })}\n\n`);
  }
}

responseStream.write(`data: ${JSON.stringify({ type: 'complete' })}\n\n`);
```

We track performance markers throughout the stream: time-to-first-token, thinking duration, and search latency. These metrics drive continuous optimisation.
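Time-to-first-token is simple to capture inside the streaming loop. Here is a sketch of a small timer class with an injectable clock so it can be tested; the class is hypothetical, and in a handler like the one above `onToken()` would be called from the `content_delta` branch.

```typescript
// Records time-to-first-token (TTFT) and total stream duration.
// Hypothetical helper; the clock is injectable for testing.
class StreamTimer {
  private readonly now: () => number;
  private startedAt = 0;
  private firstTokenAt: number | null = null;

  constructor(now: () => number = () => Date.now()) {
    this.now = now;
  }

  start(): void {
    this.startedAt = this.now();
  }

  // Only the first delta is timed; later calls are no-ops
  onToken(): void {
    if (this.firstTokenAt === null) this.firstTokenAt = this.now();
  }

  // ttftMs is null if the stream produced no content
  end(): { ttftMs: number | null; totalMs: number } {
    const endedAt = this.now();
    return {
      ttftMs: this.firstTokenAt === null ? null : this.firstTokenAt - this.startedAt,
      totalMs: endedAt - this.startedAt,
    };
  }
}
```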

Event-Driven Agent Orchestration

For autonomous workflows, we use message-triggered agents:

```text
Message Queue (Churn Signal) → Worker → Gather Context → Validate → Generate → Storage → Notify
```

The retention worker demonstrates this pattern:

- Receive churn risk signal via message queue

- Gather customer data (profiles, calls, documents)

- Validate context sufficiency with AI

- Generate personalised retention email

- Apply confidence threshold (skip if < 0.8)

- Save to storage and notify via Slack

Cooldown logic prevents over-contacting: we skip users who received retention outreach within the last 60 days. This is human-in-the-loop by design: the worker drafts and recommends outreach, the team is notified via Slack, and the cooldown keeps the system within sensible contact limits.
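The worker’s two gates can be sketched as one decision function. The 60-day cooldown and 0.8 threshold come from the steps above; the function and type names are hypothetical, and `draft` stands in for the context gathering and email generation steps.

```typescript
// Cooldown and confidence gates for the retention worker (hypothetical names).
const COOLDOWN_DAYS = 60;
const MIN_CONFIDENCE = 0.8;
const DAY_MS = 24 * 60 * 60 * 1000;

type Draft = { email: string; confidence: number };
type Decision = 'skip_cooldown' | 'skip_low_confidence' | 'save_and_notify';

function withinCooldown(lastOutreachAt: Date | null, now: Date): boolean {
  if (!lastOutreachAt) return false; // never contacted
  return now.getTime() - lastOutreachAt.getTime() < COOLDOWN_DAYS * DAY_MS;
}

function decide(lastOutreachAt: Date | null, now: Date, draft: () => Draft): Decision {
  // Check the cooldown first so no generation is paid for and then discarded
  if (withinCooldown(lastOutreachAt, now)) return 'skip_cooldown';
  return draft().confidence < MIN_CONFIDENCE ? 'skip_low_confidence' : 'save_and_notify';
}
```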

9. Memory, Observability, and Continuous Improvement

Our memory and observability layer forms a closed loop: conversations are stored, traced, scored, and fed back into model improvement. This applies the Self-Refine principle at the system level: not just individual responses, but the entire system improves through feedback.

Thread-Based Conversation Storage

Conversations use a thread-based schema supporting efficient querying:

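```text
PK: THREAD:{threadId}
SK: MESSAGE:{messageId}
GSI_1_PK: USER:{userId}
GSI_1_SK: THREAD:{type}:{threadId}
```

The primary key returns every message in a thread, while the secondary index lists a user’s threads and can narrow them by type through a prefix match on GSI_1_SK.

Observability Tracing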

Every agent interaction is traced end-to-end:

```typescript
const trace = observability.trace({
  name: 'ask-cortex',
  sessionId: threadId,
  userId: userId,
  input: userMessage,
  metadata: {
    thinking: thinkingEnabled,
    promptVersion: currentPromptVersion,
  },
});
```

Within each trace, we create generations for LLM calls and spans for tool executions. This gives visibility into token usage, tool latency, streaming performance, and cost attribution by user, session, and prompt version.

Feedback Loops and Reinforced Fine-Tuning

User feedback flows back into improvement:

```text
User Feedback → Trace Score → Dataset Export → Fine-Tuning Pipeline
```

Explicit feedback: Users rate responses, attaching scores to traces.

Implicit signals: A follow-up question suggests an incomplete answer; copying the response suggests useful content; booking a consultation afterwards suggests a high-value interaction.

High-scored traces form the basis of our fine-tuning dataset:

- Filter by score: Export traces where user rating ≥ 4 or positive implicit signals

- Curate examples: Review for quality, remove PII, ensure diverse coverage

- Format for training: Convert to instruction-response pairs

- Fine-tune: Run training jobs on base models

- Evaluate: Compare against baseline on held-out test set

- Deploy: Roll out with prompt version tracking

This closes the loop: user interactions generate traces, traces are scored, high-quality examples become training data, improved models serve future users.
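The filter-by-score and format-for-training steps could look like the sketch below. The trace shape is hypothetical, treating a copy or a booked consultation as a positive implicit signal is an assumption, and PII removal and human review are deliberately out of scope here.

```typescript
// Select high-scoring traces and emit instruction-response pairs as JSONL.
// The Trace shape is hypothetical; curation and PII removal happen elsewhere.
type Trace = {
  input: string;
  output: string;
  rating?: number;              // explicit user rating, if given
  copied?: boolean;             // implicit: user copied the response
  bookedConsultation?: boolean; // implicit: booked a consultation afterwards
};

// Rating of 4 or more, or a positive implicit signal
function isTrainingCandidate(t: Trace): boolean {
  const rated = t.rating !== undefined && t.rating >= 4;
  const implicit = t.copied === true || t.bookedConsultation === true;
  return rated || implicit;
}

function toJsonl(traces: Trace[]): string {
  return traces
    .filter(isTrainingCandidate)
    .map((t) => JSON.stringify({ instruction: t.input, response: t.output }))
    .join('\n');
}
```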

10. Sidecar Pattern: Progressive Signal Updates

One of our most impactful patterns is the User Sidecar Store: a per-user JSON file that accumulates signals progressively without requiring schema changes to our primary datastore.

```text
Storage Path: cortex/signals/ai-sidecar/latest/<userId>.json
```

The sidecar holds multiple data sections, each updated independently:

| Section | TTL | Purpose |
|---|---|---|
| Engagement Cache | 12h | PostHog metrics (logins, events, activity) |
| Conversion Signals | ∞ | Accumulated high-intent events |
| PQL Data | 24h | AI-generated propensity scores |
| Churn Data | 24h | Risk drivers, CPI scoring, RFM segments |
| Document Context | 2h | Temporary storage until batch processes |
| Watermarks | ∞ | Processing state to prevent duplicates |
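Here is a sketch of how those TTLs could be checked at read time. The section keys and the assumption that each section carries its own `updatedAt` timestamp are illustrative, not the real sidecar schema; `Infinity` encodes the ∞ rows.

```typescript
// Per-section freshness check using the TTLs from the table.
// Section keys and per-section updatedAt are assumptions about the layout.
const HOUR_MS = 60 * 60 * 1000;

const SECTION_TTL_MS = {
  engagementCache: 12 * HOUR_MS,
  conversionSignals: Infinity,
  pqlData: 24 * HOUR_MS,
  churnData: 24 * HOUR_MS,
  documentContext: 2 * HOUR_MS,
  watermarks: Infinity,
} as const;

type SectionName = keyof typeof SECTION_TTL_MS;

function isFresh(section: SectionName, updatedAt: string | undefined, now: Date): boolean {
  if (!updatedAt) return false; // section never written
  const age = now.getTime() - Date.parse(updatedAt); // NaN for unparseable timestamps
  return age <= SECTION_TTL_MS[section];             // NaN compares false, so stale
}
```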

The key insight: each handler updates only its section, merging with existing data:

```typescript
export async function saveSidecar(
  userId: string,
  updates: Partial<UserSidecar>
): Promise<void> {
  const existing = await getSidecar(userId);

  // Top-level sections in `updates` replace existing ones; only watermarks are merged key by key
  const sidecar: UserSidecar = {
    ...existing,
    ...updates,
    userId,
    updatedAt: new Date().toISOString(),
    watermarks: {
      ...existing?.watermarks,
      ...updates.watermarks,
    },
  };

  await storage.put(getSidecarKey(userId), sidecar);
}
```

This pattern solves several problems:

Schema Evolution: Adding new AI enrichment fields doesn’t require database migrations. We add fields to the sidecar and start writing immediately.

Progressive Accumulation: Conversion signals accumulate over time. When a user views pricing, that signal is preserved as newer events arrive.

Guardrails via Watermarks: The watermarks section prevents duplicate processing. Before queuing a user for batch enrichment, we check if they’ve already been processed.

Decoupled Processing: Real-time handlers save context to the sidecar; batch workers read it later. This decoupling prevents real-time paths from blocking on expensive AI operations.
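Because `saveSidecar` replaces each top-level section wholesale (only `watermarks` is merged key by key), a handler that accumulates signals has to merge them itself before saving. Here is a sketch of that merge plus a watermark check, with hypothetical shapes and names:

```typescript
// Progressive accumulation and watermark guardrails (hypothetical shapes).
type ConversionSignal = { eventId: string; type: string; at: string };

// Keep existing signals, append new ones, and drop duplicates by eventId
function mergeSignals(
  existing: ConversionSignal[] | undefined,
  incoming: ConversionSignal[],
): ConversionSignal[] {
  const merged = (existing ?? []).slice();
  const seen = new Set(merged.map((s) => s.eventId));
  for (const s of incoming) {
    if (!seen.has(s.eventId)) {
      seen.add(s.eventId);
      merged.push(s);
    }
  }
  return merged;
}

// Queue a user for a batch job only if this input version hasn't been processed yet
function shouldQueue(
  watermarks: Record<string, string> | undefined,
  job: string,
  inputVersion: string,
): boolean {
  return watermarks?.[job] !== inputVersion;
}
```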

Key Design Principles

Several principles guide Cortex architecture:

Prompt Caching: Static system prompts are cached at the LLM provider level. We mark static content with cache controls and use consistent cache keys for batch requests. This aligns with Anthropic’s guidance on maximising cache efficiency.

Batch vs Real-time: Latency-tolerant tasks use batch APIs, which major providers discount by around 50%; user-facing interactions stream responses. The cost difference is substantial: combined with prompt caching, batch processing can be 60% or more cheaper.

Confidence Scoring: Multiple validation gates before taking action. We’d rather skip a low-confidence intervention than send something wrong. This implements what Andrew Ng calls “disciplined evaluation”, the biggest predictor of production success.

Observability: Distributed tracing, structured logging, and team notifications let us monitor agent behaviour in production. When something goes wrong, we can trace exactly which tool returned bad data or which prompt version caused the regression.

Seasonal Awareness: Australian business has distinct quiet periods. Our scoring models adjust, preventing false-positive churn alerts during holidays when reduced engagement is expected.

References

- Yao, S., et al. (2023). “ReAct: Synergizing Reasoning and Acting in Language Models.” ICLR 2023. arxiv.org/abs/2210.03629
- Madaan, A., et al. (2023). “Self-Refine: Iterative Refinement with Self-Feedback.” NeurIPS 2023. arxiv.org/abs/2303.17651
- Ng, A. (2024). “Four Design Patterns for AI Agentic Workflows.” DeepLearning.AI. deeplearning.ai/courses/agentic-ai
- Anthropic. (2024). “Introducing the Model Context Protocol.” anthropic.com/news/model-context-protocol
- Microsoft Azure. (2025). “Agent Factory: The New Era of Agentic AI.” azure.microsoft.com/en-us/blog/agent-factory-the-new-era-of-agentic-ai-common-use-cases-and-design-patterns/
- Voyage AI. (2024). “Domain-Specific Embeddings for Legal and Financial Text.” docs.voyageai.com

These patterns have evolved through real production use. Each solves a specific problem we encountered as we scaled Lawpath Cortex from prototype to serving hundreds of thousands of Australian businesses. We hope sharing them, alongside the research foundations, helps other teams building agentic systems.

The Lawpath Engineering Team